The European Commission is the executive branch of the European Union, responsible for proposing legislation, implementing decisions, upholding the EU treaties, and managing the day-to-day business of the EU. It operates as a cabinet government: its College has 27 members, one from each member state, including the President who leads it. The Commission's main roles include developing strategies and policies, enforcing EU law, and negotiating international agreements on behalf of the EU.

Within the European Commission, the Directorate-General for Competition (DG COMP) plays a critical role. DG COMP is responsible for establishing and implementing competition policy to ensure fair competition within the EU internal market. This includes enforcing antitrust rules, reviewing mergers and acquisitions, preventing monopolistic practices, and controlling state aid that could distort competition. DG COMP aims to protect consumers and businesses by promoting innovation, ensuring a level playing field, and enhancing economic efficiency.

I worked at DG COMP, contributing to the Commission's efforts to maintain competitive markets and foster economic growth across the European Union.


Contributions

At the European Commission, I worked as a software architect on three projects, with a wide range of responsibilities that showcased my expertise and leadership in software development. My key responsibilities included:

- Management of Non-Functional Requirements and Project Definition: Using Enterprise Architect, I was responsible for identifying and managing non-functional requirements, ensuring that each project was clearly defined and aligned with organizational goals.

- Task Prioritization: Utilizing Jira, I prioritized tasks effectively to ensure that the most critical elements were addressed in a timely manner.

- Management of Task Dependencies: I used Jira to manage dependencies between tasks, ensuring smooth progress and minimizing delays.

- Technology Selection: I was instrumental in selecting the appropriate technologies for each project, ensuring that they were well-suited to meet the project’s needs.

- Team Member Profile Definition: I defined the profiles and roles of team members, ensuring that the team had the necessary skills and expertise to succeed.

- Inter-Team Collaboration: I facilitated collaboration between different teams whose projects needed to be integrated, ensuring seamless integration and cooperation.

- Quality Control and Testing: I defined quality controls and testing protocols, including unit tests with JUnit for Java or Google Test for C++, and memory checking with Valgrind for C++ code.

- CI/CD Systems Implementation: I defined and implemented Continuous Integration and Continuous Deployment pipelines using Bamboo, with SonarQube for static code analysis.

- Software Development Methodologies: I applied Test-Driven Development (TDD) and Behavior-Driven Development (BDD) practices to ensure high-quality software development.

- Agile Methodology: I used Scrum as the primary project management methodology, fostering an agile and efficient development process.
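Jira records dependencies as links between issues; the ordering idea behind combining prioritization with dependency management can be sketched independently of Jira as a priority-aware topological sort (Kahn's algorithm with a min-heap). This is a minimal illustration, not how Jira itself orders work; the task names and priority numbers are hypothetical.

```python
import heapq
from collections import defaultdict


def schedule(priorities: dict[str, int], depends_on: dict[str, list[str]]) -> list[str]:
    """Order tasks so every prerequisite comes before the tasks that depend on it.

    Among tasks that become unblocked together, the lowest priority number
    (1 = most critical) goes first; ties are broken alphabetically.
    Every task named in depends_on must also appear in priorities.
    """
    indegree = {task: 0 for task in priorities}  # unfinished prerequisites per task
    dependents = defaultdict(list)               # prerequisite -> tasks waiting on it
    for task, prereqs in depends_on.items():
        for prereq in prereqs:
            dependents[prereq].append(task)
            indegree[task] += 1

    # Min-heap of (priority, task) for tasks with no outstanding prerequisites.
    ready = [(priorities[t], t) for t, n in indegree.items() if n == 0]
    heapq.heapify(ready)

    order = []
    while ready:
        _, task = heapq.heappop(ready)
        order.append(task)
        for waiting in dependents[task]:
            indegree[waiting] -= 1
            if indegree[waiting] == 0:
                heapq.heappush(ready, (priorities[waiting], waiting))

    if len(order) != len(priorities):
        raise ValueError("dependency cycle detected")
    return order


# Hypothetical backlog: lower number = more critical.
print(schedule(
    {"auth": 1, "api-spec": 2, "ci": 2, "ui": 3},
    {"api-spec": ["auth"], "ui": ["api-spec"]},
))  # ['auth', 'api-spec', 'ci', 'ui']
```

A heap keeps the "most critical unblocked task next" choice at O(log n) per step, and the final length check turns a circular dependency into an explicit error instead of silently dropping tasks.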

Project 1: Definition of Two RESTful APIs

The first project involved defining two RESTful APIs at Level 3 of the Richardson Maturity Model, incorporating HATEOAS. These APIs were designed as platforms for the secure exchange of legal documents in anti-cartel proceedings.

- Internal API: The first API was intended to serve the internal staff of the European Commission.

- External API: The second API served as a platform for external personnel.
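At Level 3 of the Richardson Maturity Model, a response tells the client which actions are currently allowed by embedding hypermedia links. A minimal sketch of that idea, assuming a HAL-style `_links` object; the host, the `/documents/...` paths, and the `DRAFT`/`SUBMITTED` statuses are hypothetical and do not describe the actual e-leniency API.

```python
import json

BASE_URL = "https://api.example.org"  # hypothetical host


def document_resource(doc_id: str, status: str) -> dict:
    """Build a HAL-style representation whose links depend on the resource state.

    The client discovers the next allowed action from these links
    instead of hard-coding URLs.
    """
    self_href = f"{BASE_URL}/documents/{doc_id}"
    links = {"self": {"href": self_href}}
    if status == "DRAFT":
        # A draft can still be submitted.
        links["submit"] = {"href": f"{self_href}/submission"}
    elif status == "SUBMITTED":
        # Once submitted, the client can fetch the acknowledgement instead.
        links["acknowledgement"] = {"href": f"{self_href}/acknowledgement"}
    return {"id": doc_id, "status": status, "_links": links}


print(json.dumps(document_resource("42", "DRAFT"), indent=2))
```

In a Spring stack such as the one listed for this project, the Spring HATEOAS project offers equivalent link-building support; the sketch avoids it to stay self-contained.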

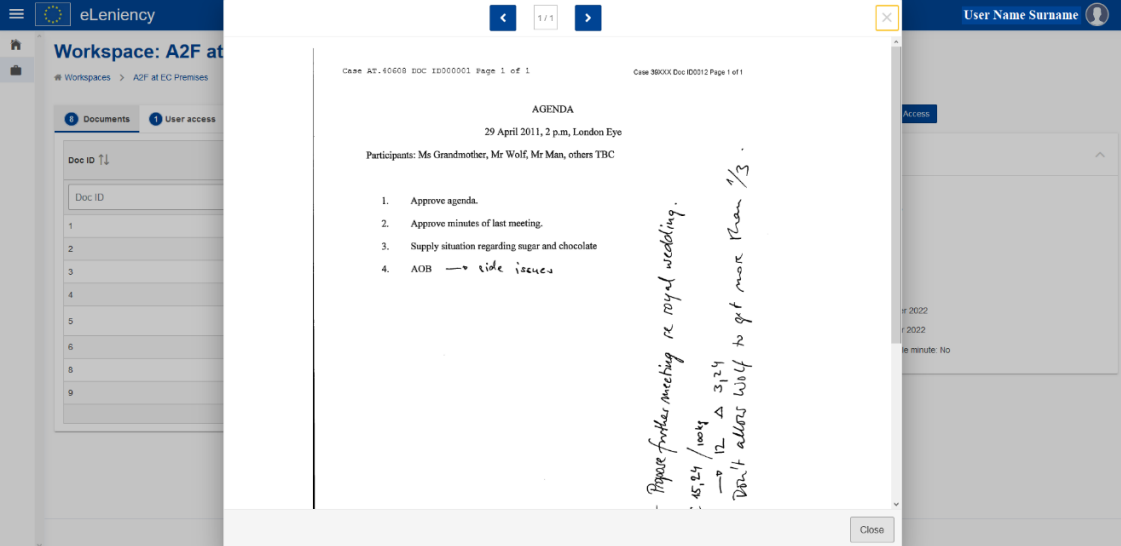

Documentation: e-leniency documentation

Technologies: Java, Spring Security, Spring MVC, Spring Data, Spring Documentation, Spring IoC, JPA, EclipseLink, WebLogic 12, Oracle DB, MongoDB, Swagger, JUnit, AngularJS, Selenium, Maven, Git, CAS, Jira, Bamboo, SonarQube, TDD, BDD

Project 2: E-Confidenciality

In the second project, I was the software architect of a system for negotiating confidential versions of legal documentation in anti-cartel proceedings. I played a key role in establishing and assigning dynamic verification groups responsible for managing online legal processes. These groups, composed of internal personnel, were verified through the Central Authentication Service (CAS), a system previously implemented under my guidance.

I architected the component that governs the status of each document using the State design pattern, designed so that changes can be reversed. Because any modification can be undone, the integrity and accuracy of the legal documents are preserved throughout the negotiation process.
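The State pattern on its own only models transitions; reversibility needs a record of earlier states. A minimal sketch of one way to combine the two, assuming a simple history stack; the three states and their names are hypothetical, not the project's real workflow.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class DocumentState(ABC):
    """One state in a hypothetical negotiation workflow."""
    name = "ABSTRACT"

    @abstractmethod
    def next(self) -> DocumentState:
        """Return the state that follows this one."""


class Draft(DocumentState):
    name = "DRAFT"

    def next(self) -> DocumentState:
        return UnderNegotiation()


class UnderNegotiation(DocumentState):
    name = "UNDER_NEGOTIATION"

    def next(self) -> DocumentState:
        return Agreed()


class Agreed(DocumentState):
    name = "AGREED"

    def next(self) -> DocumentState:
        raise ValueError("AGREED is a final state")


class Document:
    def __init__(self) -> None:
        self.state: DocumentState = Draft()
        self._history: list[DocumentState] = []  # previous states, most recent last

    def advance(self) -> None:
        new_state = self.state.next()  # raises before the history is touched
        self._history.append(self.state)
        self.state = new_state

    def undo(self) -> None:
        if not self._history:
            raise ValueError("nothing to undo")
        self.state = self._history.pop()


doc = Document()
doc.advance()
print(doc.state.name)  # UNDER_NEGOTIATION
doc.undo()
print(doc.state.name)  # DRAFT
```

Each state class decides what may follow it, while `Document` owns the undo stack, so adding a state does not change how reversal works.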

Additionally, I selected and integrated Oracle DB and MongoDB as the database engines, capitalizing on the strengths of each to manage the project's complex data requirements efficiently. I ensured that the implementation followed Level 3 of the Richardson Maturity Model, incorporating HATEOAS (Hypermedia as the Engine of Application State) principles. This approach was intended to provide a robust, scalable system capable of supporting the intricate workflows involved in anti-cartel legal proceedings.

Through my leadership and technical expertise, I significantly enhanced the project's capability to provide a secure, reliable, and user-friendly platform for negotiating sensitive legal documents, thus bolstering the efficacy of anti-cartel enforcement actions. My work as the software architect was crucial in achieving the project's goals and ensuring its success.

Documentation: e-confidenciality documentation

Technologies: AngularJS, Ansible, BDD, Bamboo, CAS, Docker, EclipseLink, Enterprise Architect, Git, IntelliJ IDEA, JPA, JUnit, Java, Jira, Maven, MongoDB, Oracle DB, Selenium, SonarQube, Spring Data, Spring Documentation, Spring IoC, Spring MVC, Spring Security, Swagger, TDD, WebLogic 12

Project 3: Semantic index of legal documents

The third project, which I proposed, is a system for the semantic indexing of legal documents. I acted as its architect and was also closely involved in the programming. The project applies clustering, specifically the K-Means algorithm, to the dataset of legal documents from the anti-cartel department. The indexing process runs in a microservice built with the gRPC framework and implemented in C++14 for efficiency and performance.
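To show what K-Means does with documents, here is a minimal sketch of Lloyd's algorithm over L2-normalised term-frequency vectors. The project's actual implementation was in C++14 and its document features are not described here; the term-frequency representation, the "first k points" seeding, and the sample sentences are assumptions chosen for a small deterministic example.

```python
import math
from collections import Counter


def tf_vectors(docs: list[str]) -> list[list[float]]:
    """Represent each document as an L2-normalised term-frequency vector."""
    vocab = sorted({w for d in docs for w in d.lower().split()})
    vectors = []
    for d in docs:
        counts = Counter(d.lower().split())
        v = [float(counts[w]) for w in vocab]
        norm = math.sqrt(sum(x * x for x in v)) or 1.0
        vectors.append([x / norm for x in v])
    return vectors


def sq_dist(a: list[float], b: list[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: list[list[float]], k: int, iterations: int = 20) -> list[int]:
    """Lloyd's algorithm; seeds centroids with the first k points for determinism."""
    centroids = [list(p) for p in points[:k]]
    labels: list[int] = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # converged: no point changed cluster
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


docs = [
    "cartel price fixing agreement",
    "merger control notification",
    "price fixing cartel fine",
    "merger notification review",
]
print(kmeans(tf_vectors(docs), k=2))  # [0, 1, 0, 1]
```

Production clustering would normally use a smarter seeding such as k-means++ and richer features; the first-k seeding here only keeps the example reproducible.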

The architecture of these microservices follows the Command Query Responsibility Segregation (CQRS) pattern, and they are deployed on a service pool for resilience and fast response times. Semantic search is powered by a neural network trained with TensorFlow on the anti-cartel legal documents dataset. I also developed a complementary service in Java that builds on this semantic search tool.
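CQRS separates the code path that changes data from the code path that answers queries, each with its own model. A minimal in-process sketch of that split, assuming a hypothetical "assign document to cluster" command and a cluster-to-documents read view; the real services communicated over gRPC, which is omitted here.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AssignCluster:
    """Command: record (or change) the cluster a document belongs to."""
    doc_id: str
    cluster: int


@dataclass
class ReadModel:
    """Query-optimised view: cluster -> document ids."""
    by_cluster: dict[int, set[str]] = field(default_factory=dict)


class CommandHandler:
    """Write side: validates commands, updates the write model, projects changes."""

    def __init__(self, read_model: ReadModel) -> None:
        self._assignments: dict[str, int] = {}  # write model: document -> cluster
        self._read_model = read_model

    def handle(self, cmd: AssignCluster) -> None:
        if cmd.cluster < 0:
            raise ValueError("cluster must be non-negative")
        previous = self._assignments.get(cmd.doc_id)
        self._assignments[cmd.doc_id] = cmd.cluster
        # Projection into the read model. Real CQRS systems often do this
        # asynchronously (for example via events); it is synchronous here for brevity.
        if previous is not None:
            self._read_model.by_cluster[previous].discard(cmd.doc_id)
        self._read_model.by_cluster.setdefault(cmd.cluster, set()).add(cmd.doc_id)


class QueryHandler:
    """Read side: answers queries from the read model only and never mutates it."""

    def __init__(self, read_model: ReadModel) -> None:
        self._read_model = read_model

    def documents_in_cluster(self, cluster: int) -> list[str]:
        return sorted(self._read_model.by_cluster.get(cluster, set()))


view = ReadModel()
commands, queries = CommandHandler(view), QueryHandler(view)
commands.handle(AssignCluster("doc-1", 0))
commands.handle(AssignCluster("doc-2", 0))
commands.handle(AssignCluster("doc-2", 1))  # reassignment
print(queries.documents_in_cluster(0))  # ['doc-1']
```

Because queries only touch the read model, the query side can be scaled out independently of the write side, which is the usual motivation for deploying the two halves as separate services.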

This dual-service approach not only facilitates high-speed semantic searches but also ensures robust and scalable indexing of complex legal documents. The system's design emphasizes resilience, efficiency, and accuracy, making it a vital tool for legal document management and retrieval in the anti-cartel department. My initiative and hands-on involvement in both the architecture and programming were crucial to the project's success.

Technologies: AngularJS, Ansible, Bamboo, C++, CUDA, Cassandra, Docker, EclipseLink, Enterprise Architect, Git, Google Test, Groovy, IntelliJ IDEA, JPA, JUnit, Java, Jira, Maven, Microservices, OpenCL, Oracle DB, Selenium, SonarQube, Spring Data, Spring Documentation, Spring MVC, Spring Security, Swagger, TensorFlow, Valgrind, WebLogic 12, gRPC

Project 4: Development of a Renewable Energy Distribution System

The fourth project involves the development of a renewable energy distribution system within the electrical grid, aiming to smooth and stabilize electricity consumption over time so that as much renewable energy as possible is used. I played a significant role in this project, contributing extensively to both its architecture and its implementation.

The system integrates a diverse range of technologies. At the core of its communication layer is a Kafka messaging cluster, where messages exchanged with devices are defined by Avro schemas. An intermediary gateway running on Raspberry Pi devices sends telemetry data and receives commands. The gateway software is written in Go and uses the Modbus protocol in both its serial and TCP variants, with TCP communication secured through a VPN.
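For readers unfamiliar with Modbus TCP, the sketch below builds a "Read Holding Registers" (function 0x03) request and parses the reply: a 7-byte MBAP header (transaction id, protocol id 0, remaining length, unit id) followed by the PDU, all big-endian. The gateway itself was written in Go; this Python version only illustrates the framing, and the unit id, register address, and register values are hypothetical. The serial (RTU) variant replaces the MBAP header with a slave address and appends a CRC-16; Kafka and Avro are not shown.

```python
import struct

READ_HOLDING_REGISTERS = 0x03


def build_read_request(transaction_id: int, unit_id: int, start: int, count: int) -> bytes:
    """Build a Modbus TCP 'Read Holding Registers' (0x03) request frame."""
    if not 1 <= count <= 125:
        raise ValueError("count must be between 1 and 125")
    # PDU: function code, starting address, quantity of registers.
    pdu = struct.pack(">BHH", READ_HOLDING_REGISTERS, start, count)
    # MBAP header: transaction id, protocol id (0 = Modbus),
    # length of the remaining bytes (unit id + PDU), unit id.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_read_response(frame: bytes) -> list[int]:
    """Extract 16-bit register values from a Modbus TCP response to function 0x03."""
    _, protocol_id, _, _ = struct.unpack(">HHHB", frame[:7])
    if protocol_id != 0:
        raise ValueError("not a Modbus frame")
    function_code = frame[7]
    if function_code & 0x80:
        # Exception responses set the high bit and carry an exception code.
        raise ValueError(f"Modbus exception code {frame[8]}")
    byte_count = frame[8]
    return list(struct.unpack(f">{byte_count // 2}H", frame[9:9 + byte_count]))


request = build_read_request(transaction_id=1, unit_id=0x11, start=0x006B, count=3)
print(request.hex())  # 0001000000061103006b0003
response = bytes.fromhex("000100000009110306022b00000064")
print(parse_read_response(response))  # [555, 0, 100]
```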

The software management for this project is handled through the BalenaIO platform, which facilitates the deployment and operation of the gateway devices. Continuous Integration and Continuous Deployment (CI/CD) processes are managed using CircleCI, ensuring a seamless and efficient development workflow.

In addition, I created a statistics parser in Groovy for ingesting telemetry data, providing insight into energy consumption patterns and system performance. Overall, I oversaw the architecture, implementation, and integration of the project's components.
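The original parser was written in Groovy and its input format is not described. As a minimal sketch of the same kind of ingestion step, the Python code below aggregates per-device count, mean, minimum, and maximum from CSV telemetry, assuming a hypothetical `timestamp,device_id,power_kw` layout and skipping malformed rows.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class DeviceStats:
    count: int = 0
    total: float = 0.0
    minimum: float = float("inf")
    maximum: float = float("-inf")

    def add(self, value: float) -> None:
        self.count += 1
        self.total += value
        self.minimum = min(self.minimum, value)
        self.maximum = max(self.maximum, value)

    @property
    def mean(self) -> float:
        return self.total / self.count if self.count else 0.0


def parse_telemetry(text: str) -> dict[str, DeviceStats]:
    """Aggregate per-device power readings from CSV telemetry.

    Assumes a hypothetical header: timestamp,device_id,power_kw.
    Malformed rows are skipped so one bad reading does not abort a batch.
    """
    stats: dict[str, DeviceStats] = {}
    for row in csv.DictReader(io.StringIO(text)):
        device = row.get("device_id")
        try:
            value = float(row.get("power_kw") or "")
        except ValueError:
            continue  # missing or non-numeric reading
        if not device:
            continue
        stats.setdefault(device, DeviceStats()).add(value)
    return stats


sample = """timestamp,device_id,power_kw
2024-01-01T00:00,gw-1,1.5
2024-01-01T00:15,gw-1,2.5
2024-01-01T00:00,gw-2,4.0
2024-01-01T00:15,gw-2,n/a
"""
for device, s in sorted(parse_telemetry(sample).items()):
    print(device, s.count, s.mean, s.minimum, s.maximum)
# gw-1 2 2.0 1.5 2.5
# gw-2 1 4.0 4.0 4.0
```

Running aggregates keep memory constant per device, which matters when telemetry arrives continuously rather than as one file.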

My hands-on approach and technical expertise were instrumental in addressing the challenges of renewable energy distribution, from applying advanced technologies to developing robust communication protocols, in a project aimed at improving the stability and efficiency of renewable energy use within the electrical grid.

Technologies: Go, Unit Test, BalenaIO, Raspberry Pi, Modbus, Python, Makefile, Docker, Kubernetes, GCP, Azure IoT Hub, Kafka, Avro Schemas, Ansible, Elasticsearch, Kibana, Jira, Groovy